“Musical quality above genre coherence”

Pleased to announce that my pals Jake and Jesse and I will be hosting a local version of the venerable Beat Research.

On the first and third Wednesdays of the month we’ll deliver a few hours of experimental party music at Villain’s in the South Loop.

Jake (DJ C) started Beat Research in Boston in 2004 with Antony Flackett (DJ Flack) as a base for the genre-bending sets of music the two had been playing since the late ’90s.

Since inception, Beat Research has hosted some of the best and brightest DJs and producers of underground bass music in the world, given a number of young luminaries their first gigs, and presented an utterly motley collection of tech-addled live performances. The long list of special guests includes DJ Rupture, Kingdom, Eclectic Method, Ghislain Poirier, Vex’d, edIT, and Scuba.

DJ C has since moved to Chicago (replaced by the incomparable Wayne Marshall) and, having missed those heady days in the Beat Research DJ booth, didn’t need much encouragement to be persuaded that this city could use its own explorations into innovative dance music.

Beat Research has been hard to match for anyone seeking out extraordinary sounds. Now Chicagoans can look forward to their own twice-monthly session for discerning dancers and enthusiastic head-nodders.

More information and a mailing list sign-up at Beat Research Chicago. Toots at @beatresearch.

See you there, Chicago.

Giftmix 2011

Pretty sure there’s at least a few naughty among you, but here’s the annual giftmix just the same. Happy holidays and best in 2012, friends.

Tracks:

This City Is Killing Me – Dusty Brown

Seeing the Lines – Mr. Projectile

Futureworld – Com Truise

Unbank – Plaid

Daydream – Tycho

German Clap – Modeselektor

Spacial – Tevo Howard*

Rubin – Der Dritte Raum

Human Reason – Adam Beyer

Planisphere – Justice

Genkai (1) – Biosphere

* Chicago artist

Kid in a treehouse

This past weekend was our annual neighborhood party, Retro on Roscoe. It’s an all-out fest that ignites the blocks around it for 48 hours. As such, some friends of ours host an annual pre-party that spans all five of their contiguous backyards, something you don’t often see in the city.

I was asked to DJ the party, whose theme this year was “Boogie Nights”: basically ’70s tunes infused with other tracks that carry forward the ethos of that era. The booth was a treehouse smack in the middle of the party, which we outfitted tip-to-tail with music and lighting gear. I played from 8pm to 3am and, though I almost wet myself (with no backup until late), it was an astounding event.

I had a stripped-down setup (compared to, say, Christmas Party), but it was ample. Just the MacBook Pro running Ableton Live, a Native Instruments Audio Kontrol multichannel interface, and an Akai APC40 control surface. The Akai was perfect: a monome-esque grid with the knobs and sliders of a Novation SL Remote, though far sturdier.

I had hundreds of song fragments pre-loaded and warped — something that gave me almost unlimited flexibility to respond to the crowd without letting things drop. I even entertained requests from those brave enough to scale the nearly vertical steps.

I didn’t want to come down. Why? Let’s recap. I was in a kickass treehouse with another geek (Tom, on lights), a good friend, and a cooler of beer. We were controlling several hundred watts of music and commanding the best view of the space. Sure, we were one chain link’s failure away from death by falling steel, but if that’s how I was supposed to go, I wouldn’t complain.

A few have asked for a recording from the night. I’m still cleaning up most of it, but here’s an excerpt from when I think the most people were out in the yard dancing. (Specifically the slightly sped-up Diana Ross with a few claps and bloops layered on top was the pinnacle, if memory serves.)

Download the DJ set. Full photoset here.

PS – I’m two-for-two luring Chicago’s finest with low frequencies. I suppose next Christmas we’ll go for the hat trick.

‘Bout damn time

It’s out. Jesse’s posted his 2009 Inauguration Mix.

Superb track curation, tasty scratches, all mixed live like a guy who knows what the hell he’s doing.

My contribution? I am a spectral fraction of the crowd-cheering waveform from the Grant Park speech layered in. So, yeah, I’ll be demanding royalties.

Pull it down and turn it up.

OO5

A short note to let you know that I am pleased to be part of the 2009 incarnation of Out of 5, a weekly theme-based mix site put together by Andrew and nine of his pals. The mixes are only up for a week, so grab them while you can. (Alerts via toot, here.)

This week’s theme: songs about resurrection, coming back to life, returning, resurfacing. Perhaps a theme more suited to April and T.S. Eliot’s The Waste Land, but we have to stay optimistic here in Chicago, spiritual home of seasonal affective disorder.

And yes, it took every shred of restraint not to submit the theme music to Return of the Living Dead, obviously.

Now just to kickstart breakbeatbox and we’ll be off to the races.

Christmas in hell

There are many ways to ruin Christmas. Crappy gifts, drunken co-workers, eye-searing sweaters, family itself. (Hollywood makes a mint on the mini-genre of mirth-to-misery.)

But I’d like to go deeper than that. I’d like to attempt to scar your subconscious. I want to slice into that corner of your childhood memories that is still very fond of Christmas music. Sure, you say you hate these tunes now, that the infernal jingling-bells-makes-a-song-Christmassy trick makes you want to gore your ear with a flaming yule brand.

But you lie. And I would like to help you confront that lie, to eradicate the joy.

Back in the 1990s, in the final supernova of cassette tape usage before its demise at the hands of digital, my pal ASG made me a unique holiday mix: the kristMess tape. Much of it is thick atonal drum-and-bass, but the nugget at the center is the gift beneath the bow. My gift. To you.

O Come All Ye Faithful, excerpt, 2:13

V/VM, The V/VM Christmas Pudding

Feel free to use this liberally throughout the next week as circumstances dictate. If Ebenezer and the Grinch aren’t cutting it for you and holiday horror doesn’t set you back on the right path, just put this on loop and relax. Halloween is only ten months away.

See also: Carbone Dolce

Codemusic

Lately I have been loving a few truly innovative audio apps for the iPhone, none having to do with it being an iPod.

I had always thought that mobile audio creation apps were frivolous party tricks. Hey, look at me, I can play Baby Got Back on my 3″-wide keyboard! But that’s changed.

A while back I wrote about an idea for including audio processing code in the header of MP3 files. The premise was that, in addition to creating a music track, the artist would provide parameters for real-time playback modification based on user input, randomness, or anything else. The song would never (or at least wouldn’t ever have to) be the same.

The team at RJDJ have taken this idea to the extreme. The free and pay RJDJ apps in the iTunes store both provide “scenes”, akin to music tracks, complete with artwork. These scenes are nothing but audio processing algorithms.

All input happens via the lavalier microphone on the iPhone earbuds. Basically the scenes take the ambient noise surrounding you and remix it. Some of the scenes do this subtly, some are more musical, but all of them make you the focal point of the remix: not so much a musician as a conductor. I’ve listened to the noise of the L train, walking down the street, and the cacophony of three kids at dinner time. It is completely entrancing. Location-based remixing.

So, to our list of traditional musical interfaces — stick hitting animal skin, horse hair pulled across wire — we add one’s physical movement through life’s soundscape.

Here’s a more musical scene based on my eastward walk through the city a few days ago*. Note especially the interpolation of me almost being hit by a cab crossing Michigan Ave. at 1:16 (red marker on map). The horn makes the piece, in my opinion, but the beauty of this particular scene is how the bleeps and bloops are modulated by the ambient street noise.

Of course this map isn’t connected in any way to playback control, but with the iPhone’s GPS it seems like an obvious evolution of the RJDJ app. The possibilities are many. How about a View in Google Maps button in iTunes? Or a site that aggregates user-created tracks and plots them over one another on a map, a personal-social musical-spatial mashup. Dan Hill’s city of sound, indeed.

——–

There are some other apps of note too.

Bloom is a generative music app from none other than Brian Eno, working with Peter Chilvers. You initiate notes of music by touching the screen. Each note plays and interacts with other notes in expanding concentric circles, like dropping pebbles in a pond. As with scenes in RJDJ, the parameters of note interaction are constrained by “moods”. These are the algorithms that govern the evolution of the sounds you start off. Spore for music. (Not a coincidence that Eno did the music for Spore, of course.)

Ocarina is one of those apps that makes you love the creators for thinking of it. Basically Ocarina turns your iPhone into a high-tech flute. OK, you say, I can see touching the screen like you cover the holes of a woodwind, but where do you blow? Why, the microphone of course! They’ve turned the lack of a wind guard on the iPhone mic into a feature! Light exhalation makes less noise on the mic and produces a lower intensity of the current note combination, and conversely. It’s brilliant really.

——–

* There’s no easy way to export audio from RJDJ, but this handy tool allows you to parse the backup file that the iPhone generates on your machine. You can pluck out the .wav files right from the RJDJ folder.

The Mashability Index

A while back my brother gave me several thousand songs from GoodBlimey.com. Almost all the tracks were mashups. Each song was composed of two songs by two different artists fairly equally smooshed together.

All the track titles were in the A vs. B format (e.g., Black Eyed Peas vs Kraftwerk) — and this gave me an idea.

I exported all the track data as a text file. Then my pal Chris Gansen wrote a script that nuked everything except the two artist names for each track and transformed the data into a spreadsheet like this:

A B 1

A C 1

B D 1

C D 3

C B 2

…
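For the curious, here is a rough Python sketch of what a script like that could look like. It is not Chris's actual script, which I don't have in front of me. It assumes one "A vs. B" title per line, treats " vs " and " vs. " as the only separators, and counts each title line as one mashup. The names `parse_pair`, `count_pairs`, and `to_rows` are made up for illustration.

```python
import re
from collections import Counter

# Assumed separator: " vs " or " vs. " in any letter case, with
# whitespace on both sides so names like "Elvis" are left alone.
VS_PATTERN = re.compile(r"\s+vs\.?\s+", re.IGNORECASE)


def parse_pair(title):
    """Return (artist_a, artist_b) for an 'A vs. B' title, else None."""
    parts = VS_PATTERN.split(title.strip())
    # Skip anything that is not exactly two non-empty artist names.
    if len(parts) != 2 or not all(part.strip() for part in parts):
        return None
    return parts[0].strip(), parts[1].strip()


def count_pairs(titles):
    """Count how many titles mash each (artist_a, artist_b) pair."""
    counts = Counter()
    for title in titles:
        pair = parse_pair(title)
        if pair is not None:
            counts[pair] += 1
    return counts


def to_rows(counts):
    """Format the counts as tab-separated 'A<TAB>B<TAB>n' rows."""
    return [f"{a}\t{b}\t{n}" for (a, b), n in counts.items()]


if __name__ == "__main__":
    sample = ["A vs B", "C vs. D", "C vs D", "C VS D", "not a mashup"]
    for row in to_rows(count_pairs(sample)):
        print(row)
```

Note that this sketch keeps the artists in the order they appear in the title, so "B vs C" and "C vs B" would land in separate rows. Whether to merge them is a judgment call.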

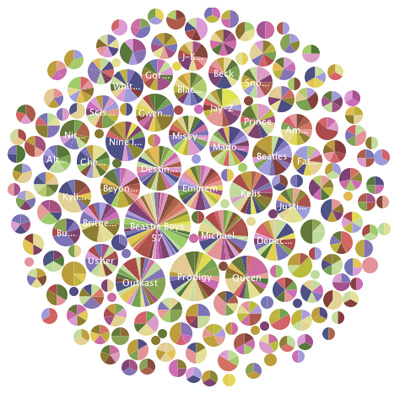

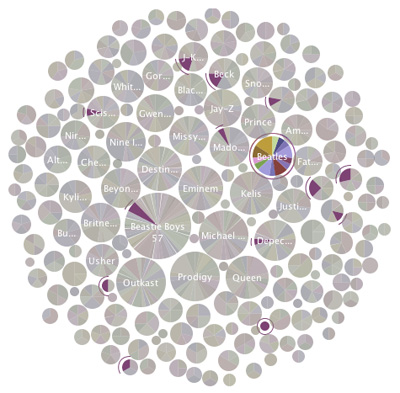

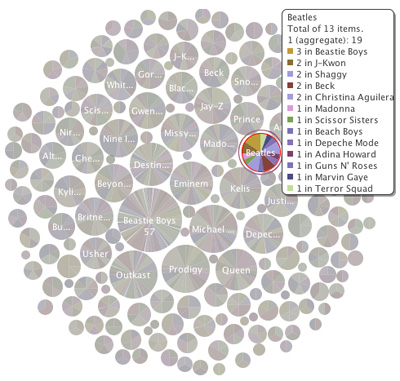

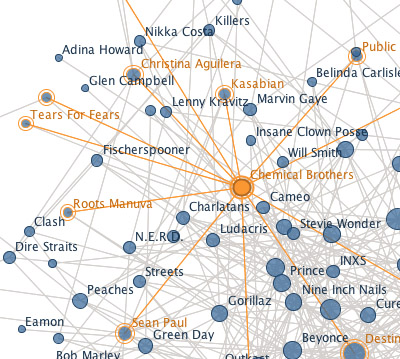

Then it was just a matter of plugging the data into Many Eyes and playing with the visualization types. (In the spreadsheet, the first two columns are the artist names and the third column is the number of unique songs in which they were mashed together.) The best by far is the bubble chart view. (Here’s the interactive chart.)

Each circle above represents a single artist. The larger the circle the more other artists the selected artist is mashed with.

The color slices actually tell you at a glance which other artists have been mashed … if you are an autistic savant who can pick out a single color in a sea of several hundred chromatic gradations, that is.

Much easier is clicking a circle which highlights the other artists with which it is mashed.

An alternate view gives the most complete information, including the number of mashed tracks per artist combination.

One other useful view was the network diagram. It shows actual connections between artist combos. The best feature of the diagram is that selecting a node highlights all the other artists with which that artist is mashed. Easy to figure out who’s connected to whom. (Here’s the interactive diagram.)

So what have we learned? Certainly my data set does not contain every mashup ever made. But there were thousands and I think the charts give a good sense of which artists mash best (look for the big circles) and mash best with whom.

But there’s far more that could be done. For one, there’s no data in these charts on which songs are being mashed. I have the info — just haven’t figured out how to integrate it. What I really would love to get at is why two artists make sense together. This would require stylistic data, notoriously subjective and consequently unreliable. Still, consider this but a start of the analysis.

Two particular projects influenced my work on this index. The History of Sampling by Jesse Kriss is a bar that I didn’t even come close to hitting, but it provided a great place to aim. And Andy Baio’s analysis of the samples in Girl Talk’s Feed The Animals showed what could be done with an idea, Amazon Mechanical Turk, and some cool visualizations.

In truth, getting this data into shape was a massive pain in the ass. It was horribly formatted to begin with and took a great deal of kicking and shoving to play nicely with Many Eyes. Above all, thanks to Chris, but Jesse Kriss, Frank van Ham, and Martin Wattenberg of the Many Eyes team deserve applause too.

This is a lot cooler than My Music Genome, isn’t it?

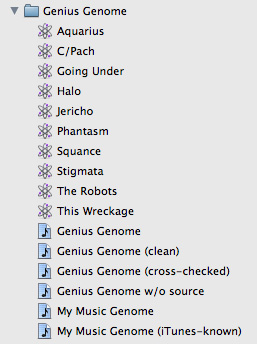

Evolving my music genome

So, iTunes Genius feature, it’s just you and me. Face-to-face. Gloves off. You think you know what I like? OK, you get one track to prove yourself.

No, no, that’s not fair. I’ll give you something really juicy to crunch on. How about you take a playlist that I described a while back as My Music Genome, the very seed (in my human algorithm-based estimation) of the majority of what I listen to now? Musical eugenics.

Oh, you don’t make playlists from other playlists? Only single tracks? Sucks. Fine, let me do this one-by-one. 12 tracks in the list; 25 recommendations per track. Let’s start being genius … Go!

Wait, what’s that? You can only identify 10 of 12 songs in my genome? You’re telling me that you have never heard of Orbital’s Impact or Vapourspace’s magnum opus? You have the Orbital track in your music store, for god’s sake!

OK, fine, go for it with the remaining 10. I’ll wait.

- Going Under – Devo

- The Robots – Kraftwerk

- This Wreckage – Gary Numan

- Squance – Plaid

- Halo – Depeche Mode

- Jericho – The Prodigy

- C/Pach – Autechre

- Stigmata – Ministry

- Aquarius – Boards of Canada

- Phantasm – Biosphere

- Gravitational Arch of 10 – Vapourspace

- Impact (The Earth Is Burning) – Orbital

Cool, 10 new playlists. Let me open them right up. 250 tracks. Subtract the “source” tracks, that gives me 240 songs that you think spring from my base musical tastes. Interesting.

——–

There are plenty of ways I could slice this data — Last.fm tags, AllMusic moods, BPM, waveforms — and I just might. But right now what jumps out at me are the duplicates. That is, the recommendations that come from two or more “source” songs from my genome. This might mean something.

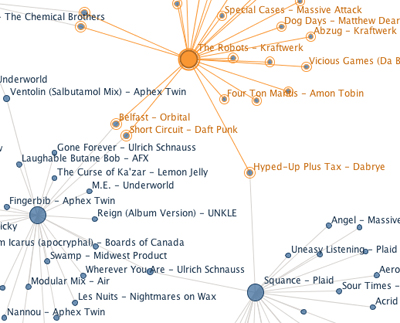

The duplicates are important because they narrow the tree back down. They’re the inbred family members, points where multiple threads of interest converge. (In the image above, Hyped-Up Plus Tax by Dabrye, for instance, is a recommendation generated from both Plaid’s Squance and Kraftwerk’s The Robots.)

The overlaps are few, but meaningful.

- Aftermath – Tricky

- Children Talking – AFX

- Chime – Orbital

- The Curse of Ka’zar – Lemon Jelly

- Dominator [Joey Beltram Mix] – Human Resource

- A Forest [Tree Mix] – The Cure

- Future Proof – Massive Attack

- Gone Forever – Ulrich Schnauss

- Hyped-Up Plus Tax – Dabrye

- Laughable Butane Bob – AFX

- Little Fluffy Clouds – The Orb

- Me? I Disconnect From You – Gary Numan + Tubeway Army

- Mindphaser – Front Line Assembly

- Monkey Gone to Heaven – Pixies

- Paris – MSTRKRFT

- Satellite Anthem Icarus (apocryphal) – Boards of Canada

- Stars – Ulrich Schnauss

- We Are Glass – Gary Numan
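Finding overlaps like the ones listed above is easy to script. Here is a minimal Python sketch, assuming each Genius playlist has been exported as a list of track names keyed by its source track. The function name `find_overlaps` and the "placeholder" tracks are invented for illustration. Only the Dabrye example comes from my actual data.

```python
from collections import defaultdict


def find_overlaps(playlists):
    """Map each track recommended by 2+ sources to those sources.

    `playlists` maps a source track to its list of recommendations.
    """
    sources_by_track = defaultdict(set)
    for source, recommendations in playlists.items():
        for track in recommendations:
            # Genius includes the source track in its own playlist; skip it.
            if track != source:
                sources_by_track[track].add(source)
    # Keep only the tracks that two or more source songs agree on.
    return {
        track: sorted(sources)
        for track, sources in sources_by_track.items()
        if len(sources) >= 2
    }


if __name__ == "__main__":
    # Hypothetical, heavily truncated playlists for illustration only.
    playlists = {
        "Squance - Plaid": [
            "Squance - Plaid",
            "Hyped-Up Plus Tax - Dabrye",
            "placeholder track 1",
        ],
        "The Robots - Kraftwerk": [
            "The Robots - Kraftwerk",
            "Hyped-Up Plus Tax - Dabrye",
            "placeholder track 2",
        ],
    }
    for track, sources in find_overlaps(playlists).items():
        print(track, "<-", ", ".join(sources))
```

Using a set per track means a recommendation that somehow appears twice in the same playlist still counts as a single source.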

A few identifiable strains emerge from this new “evolved” playlist. (These characteristics don’t necessarily reflect the dominant style of the artists themselves, just the tracks, which is more precise anyway.)

- shoe-gazy, downtempo: The Cure, Ulrich Schnauss, Boards of Canada, Dabrye

- hard-edged: AFX (aka Aphex Twin), Human Resource, Front Line Assembly, Pixies, MSTRKRFT

- genre-benders: Tricky, Lemon Jelly, The Orb

(Not sure where Gary Numan fits in that typology, but he deserves to be in every list as far as I am concerned.)

Wow, John, that’s amazing, you’re thinking. You’ve managed to waste countless hours compiling data to tell yourself that you like soft music, hard music, and music that mixes the two. Such insight!

Actually it is interesting because the artists in this new playlist are some of my most-played. Lemon Jelly, Ulrich Schnauss, and Boards of Canada have been on heavy rotation for years. Clearly they are the fruit of stylistic seeds planted long ago. And now we have something approaching empirical proof. Truth is, most of what I listen to is either ambient or hard-edged or some outlying miscegenation. And there’s plenty of music that doesn’t fall into those categories.

The most interesting data point is that Satellite Anthem Icarus by Boards of Canada is the song that the iTunes Genius most thinks defines my music listening. It is part of multiple playlists generated from the source playlist.

Satellite Anthem Icarus – Boards of Canada

But here’s the crazy thing. That particular track is not the actual Boards of Canada track by the same name. It was included in a partially-bogus torrent download just prior to the official album being released. But I did actually fall in love with it. It is one of my favorite of their tracks. Except that it isn’t theirs. (Full story of this odd situation here.)

So, according to iTunes, the song that most represents the evolution of my musical taste is one that it should by all rights not even know about.

Now this gets to the heart of the mystery surrounding the Genius functionality itself. What exactly is it doing? It recommended this fake song to me which is neither named precisely what the real track is (in my library I have “(apocryphal)” in the title) nor is it the same length. And if by some crazy chance Apple is doing waveform analysis, it sounds nothing like the real version. So how could Genius recommend something that iTMS obviously doesn’t have in its library? Relatedly, why would Genius not recognize the Orbital track in my library when I renamed it precisely as it is named in iTMS?

UPDATE: Commenter Pedro helpfully notes that this “fake” is actually Up the Coast by Freescha. Which makes this whole experiment really interesting. I agree with Apple that this song is extremely emblematic of my distilled music tastes, yet as noted above none of the metadata I had would have tipped Apple off to that. Is it possible that Apple is actually doing music analysis in the manner of Amazon’s text analysis? I really can’t believe that, if for no other reason than that the initial Genius scan (when you run iTunes 8.0 for the first time) would take forever, which it did not. Still, I want to believe. This is the way recommendations should happen.

I really don’t know how the recommendations are being generated, but I do think it is based on something more than store purchase data. Consider the jump from Ministry’s Stigmata to TMBG’s Ana Ng.

Stigmata – Ministry

Ana Ng – They Might Be Giants

There’s pretty much nothing similar between industrial music and irony-laden pop. But these two songs are definitely related when you consider their respective “hooks”: both use heavily-produced, effected, and clipped guitar noises as their main musical trope. Coincidence? Maybe, but why else would they be connected? Not music store data, methinks. Obviously Apple’s exact algorithm is a secret, but I’d love to know more.

——–

Some procedural notes. It helped that I already had a short playlist of stuff I considered influential. (Though I find it a lamentable shortcoming that Genius can’t generate a playlist from a playlist. It would have to infer commonality first then generate a new list. How tasty would that be?)

I then just set Genius to create a new playlist per track. Various recombinations of the playlists yielded a clean list which I flipped into a spreadsheet using the very handy Export Selected Song List AppleScript.

From the spreadsheet data I experimented with and aborted a bunch of different visualization ideas. At one point I had a monstrously large 10-headed Venn diagram in Illustrator that hurt to look at.

Eventually I created the network diagram in the screenshot at the top of this post using the wonderful Many Eyes social visualization site. (Yes, Many Eyes is IBM. Disclose that!)

A fuller, more interactive version of this visualization is available (Safari recommended, if you are on a Mac). Also the source data is there for the playing. I am sure there are other ways to massage it.

Enjoy this level of music nerdery? Dive into the Ascent Stage back catalog:

Call of the wild

In Kenya I stayed in a tent camp — not at all a luxury and a great way to extend the daytime safari thrill of being surrounded by animals. It was a thrill mostly unseen as the night came alive with noises that were always just outside the radius of the feeble gas lanterns around the camp.

Maasai tribesmen, hired by the camp, patrolled the grounds at night, but it was still unnerving. Perhaps even more so when I’d start to wonder why we needed guards in the first place.

Cracking branches, rustling in the brush, and occasional screeches in the distance — it all made getting up to take a leak outside the tent at night positively terrifying. In fact the night before I arrived a lion came into camp at night and roared for about twenty minutes. The Maasai said it was just “talking” to its pride.

Coincidentally I had been reading a fascinating survey of 20th century music that mentioned in passing a study by two psychologists exploring the reason that certain musical passages give people the chills.

Their theory? It’s related to the call of the wild, which also explains the feeling of hearing an animal cry in the distance in a dark tent.

In our estimation, a high-pitched sustained crescendo, a sustained note of grief sung by a soprano or played on a violin (capable of piercing the ‘soul’ so to speak) seems to be an ideal stimulus for evoking chills. A solo instrument, like a trumpet or cello, emerging suddenly from a softer orchestral background is especially evocative.

Accordingly, we have entertained the possibility that chills arise substantially from feelings triggered by sad music that contains acoustic properties similar to the separation call of young animals, the primal cry of despair to signal caretakers to exhibit social care and attention. Perhaps musically evoked chills represent a natural resonance of our brain separation-distress systems which helps mediate the emotional impact of social loss.

Put another way, a solo instrument breaking free from the larger family of sound evokes in humans a kind of separation anxiety, an empathetic response that, like separation, is largely fear-based. And this response, the authors posit, is evolutionary. It’s related to animals (or human babies) calling out for attention. The call of the wild is a call of isolation. And isolation is scary.

They continue, attempting to explain the chills further.

In part, musically induced chills may derive their affective impact from primitive homeostatic thermal responses, aroused by the perception of separation, that provided motivational urgency for social-reunion responses. In other words, when we are lost, we feel cold, not simply physically but also perhaps neuro-symbolically as a consequence of the social loss.

“Homeostatic thermal responses” … yes, a hug. Chills as symbolic response to a lack of skin contact with others of your group. (Consider this image of a baby monkey clinging to the underside of its mother.)

See also a podcast from today’s Guardian on related evolutionary insights from music.